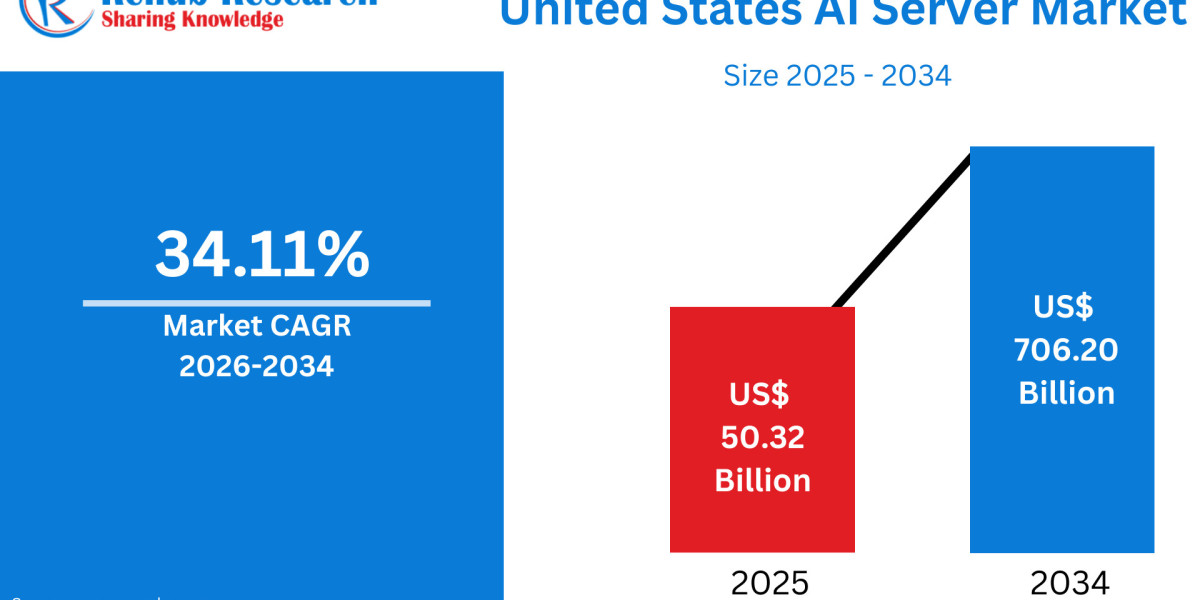

United States AI Server Market Size & Forecast 2026–2034

According to Renub Research, the United States AI server market is poised for extraordinary growth during the forecast period from 2026 to 2034. The market is projected to expand from a valuation of US$ 50.32 billion in 2025 to approximately US$ 706.20 billion by 2034, a compound annual growth rate (CAGR) of 34.11%. This rapid expansion is driven by the explosive growth of artificial intelligence workloads across hyperscale data centers, cloud service platforms, enterprise IT environments, and research institutions. The accelerating adoption of generative AI, high-performance computing (HPC), and big data analytics, combined with heavy investment in GPU- and accelerator-based infrastructure, is positioning AI servers as one of the most critical pillars of the U.S. digital economy.
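As a quick sanity check on the headline figures, the short Python sketch below recomputes the growth rate implied by the two valuations quoted above. The function name `cagr` and the variable names are illustrative, and the calculation assumes nine compounding years from the 2025 base to 2034; the report itself does not spell out its compounding convention.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.34 means 34%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Valuations quoted in the report summary, in US$ billion.
base_2025 = 50.32
forecast_2034 = 706.20

# Assumption: growth compounds over the nine years from 2025 to 2034.
periods = 2034 - 2025

rate = cagr(base_2025, forecast_2034, periods)
print(f"Implied CAGR: {rate:.2%}")
```

Under that nine-year assumption the implied rate comes out at roughly 34.1%, which is consistent with the CAGR stated in the report.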

Download Free Sample Report: https://www.renub.com/request-sample-page.php?gturl=united-states-ai-server-market-p.php

United States AI Server Market Overview

An AI server is a high-performance computing system purpose-built to handle artificial intelligence workloads such as machine learning, deep learning, natural language processing, computer vision, and generative AI. Unlike conventional enterprise servers, AI servers integrate powerful accelerators including GPUs, TPUs, FPGAs, and custom ASICs to support massively parallel processing of large datasets at very high speeds. These servers also feature high-bandwidth memory, ultra-fast storage architectures, and low-latency networking to enable distributed training and real-time inference at scale.

The United States leads the global AI server market due to its dominance in artificial intelligence research, cloud computing, semiconductor innovation, and advanced digital infrastructure. Technology giants, cloud hyperscalers, startups, government agencies, and research laboratories rely heavily on AI servers to train large language models, analyze massive datasets, and deploy AI-driven applications across industries. As AI becomes deeply embedded in business operations and public services, AI servers are emerging as a foundational component of the country’s long-term technological competitiveness.

Explosion of AI Adoption Across Industries

The rapid and widespread adoption of artificial intelligence across nearly all major industry verticals is a primary growth driver for the U.S. AI server market. Enterprises deploy AI for recommendation systems, fraud detection, customer personalization, predictive maintenance, automation, and decision intelligence. In sectors such as finance, retail, manufacturing, logistics, and government, AI initiatives are moving beyond experimentation into full-scale production environments.

This shift requires resilient, scalable, and high-performance infrastructure capable of supporting continuous training and inference. The proliferation of data generated from IoT devices, mobile platforms, enterprise software, and connected systems further accelerates the need for in-house and cloud-based AI servers. GPU-accelerated servers, supported by mature software ecosystems, have become the backbone of AI deployment strategies across the United States.

Generative AI and Large Language Models as Growth Engines

Generative AI and large language models (LLMs) represent one of the most powerful catalysts for growth in the U.S. AI server market. Training and fine-tuning large models requires enormous computational capacity, high-bandwidth memory, and fast interconnects that only specialized AI servers can provide. Enterprises increasingly seek to customize foundation models using proprietary data, driving demand for dedicated and hybrid AI server environments rather than reliance on shared public cloud resources alone.

Inference workloads are also scaling rapidly as LLMs are embedded into productivity tools, customer service platforms, software development pipelines, healthcare diagnostics, and creative applications. This expansion creates sustained demand for dense clusters of AI servers deployed in centralized data centers as well as edge locations. The commercialization of generative AI ensures long-term momentum for AI server investments throughout the United States.

Government, Regulatory, and Security Considerations

Public policy, regulation, and national security considerations play an important role in accelerating AI server adoption in the United States. Sensitive workloads involving defense, healthcare records, financial data, and intellectual property often require on-premises or sovereign AI infrastructure rather than exclusive reliance on multi-tenant public clouds. Increasing regulations related to data residency, AI transparency, and model governance are prompting organizations to invest in dedicated AI servers that provide greater control and auditability.

Cybersecurity requirements further boost demand, as AI-powered threat detection, fraud analytics, and behavioral monitoring systems depend on high-performance compute. In addition, government funding and incentives for AI research, domestic semiconductor manufacturing, and critical infrastructure development are supporting the large-scale build-out of AI-ready data centers across the country.

High Capital and Operating Costs

Despite strong growth prospects, high capital and operating costs remain a major challenge in the U.S. AI server market. Advanced GPU-based and accelerator-driven servers are extremely expensive, and large deployments require significant investments in networking, storage, power delivery, and cooling infrastructure. For many enterprises, justifying the upfront costs can be difficult, particularly when AI use cases are still evolving or returns on investment are uncertain.

Operational expenses also pose challenges. AI servers consume substantially more power and generate higher heat loads than traditional servers, increasing electricity costs and creating complex cooling requirements. Data centers may need upgrades to power distribution systems, backup infrastructure, and thermal management solutions, which can lengthen deployment timelines and increase total cost of ownership.

Skills Gaps and Integration Complexity

Another key constraint in the U.S. AI server market is the shortage of skilled professionals capable of deploying and managing AI infrastructure. AI workloads require expertise in machine learning frameworks, distributed training, container orchestration, and high-performance networking. Integrating AI servers into legacy IT environments often exposes limitations in data pipelines, storage performance, and security architectures.

Organizations may experience underutilized resources or extended implementation timelines due to software-hardware compatibility challenges and operational complexity. These factors can slow adoption, especially among mid-sized enterprises and traditional industries transitioning to AI-driven operations.

United States GPU-Based AI Server Market

GPU-based AI servers represent the dominant segment of the U.S. AI server market. GPUs are ideally suited for deep learning and generative AI workloads due to their massively parallel architecture and mature software ecosystem. Hyperscalers, SaaS providers, and enterprises standardize on GPU-accelerated platforms to support frameworks such as PyTorch and TensorFlow.

Continuous innovation in GPU architecture, memory bandwidth, and interconnect technologies ensures that GPU-based servers remain central to AI infrastructure strategies across the United States.

United States ASIC-Based AI Server Market

ASIC-based AI servers are emerging as a targeted, high-efficiency alternative for specific workloads. These custom-designed accelerators are optimized for tasks such as inference, recommendation engines, and video analytics. Their primary advantage lies in improved performance-per-watt and lower total cost of ownership for predictable, large-scale workloads.

Cloud providers and major internet companies are early adopters of ASIC-based AI servers, often developing proprietary chips tailored to their platforms. While this segment remains smaller than the GPU-based segment, it is expected to grow steadily as AI workloads mature.

United States Cooling Technology Trends in AI Servers

Thermal management is a critical consideration in AI server deployment. Air cooling remains the most widely used approach in existing data centers due to familiarity and lower upfront costs. However, as power densities increase, hybrid cooling solutions combining air and liquid cooling are gaining traction. These systems enable higher rack densities while minimizing the need for complete facility redesigns, offering improved thermal efficiency and a lower power usage effectiveness (PUE), the ratio of total facility energy to IT equipment energy, where lower values indicate less overhead.

United States AI Server Form Factor Trends

Rack-mounted AI servers dominate large-scale data centers due to their scalability and compatibility with hyperscale environments. Blade servers appeal to enterprises seeking dense, modular compute with centralized management, while tower servers address edge and smaller enterprise use cases where full data center infrastructure is impractical. Each form factor supports different deployment strategies within the broader AI ecosystem.

United States BFSI AI Server Market

In the BFSI sector, AI servers underpin mission-critical applications such as fraud detection, algorithmic trading, credit scoring, and risk modeling. Low latency, high security, and regulatory compliance drive financial institutions to deploy dedicated AI servers on-premises or in private clouds, ensuring control over sensitive data and models.

United States Healthcare & Pharmaceutical AI Server Market

Healthcare and pharmaceutical organizations are among the largest adopters of AI servers in the United States. AI supports medical imaging, clinical decision support, genomics, drug discovery, and trial optimization. These workloads require both high compute capacity and strict privacy controls, driving investment in on-premises and private-cloud AI server infrastructure.

United States Automotive AI Server Market

The automotive industry relies heavily on AI servers for vehicle design, manufacturing, connected services, and autonomous driving development. Large GPU clusters train perception and planning models, while AI supports simulation, quality inspection, and supply chain optimization. As vehicles become software-defined, backend AI servers process vast volumes of telematics and sensor data.

Regional Analysis of the U.S. AI Server Market

California remains the epicenter of AI server demand due to its concentration of hyperscalers, AI startups, and semiconductor companies. New York’s market is driven by financial services and low-latency trading applications, while Texas is emerging as a major AI server hub due to favorable energy economics, abundant land, and expanding enterprise data center investments.

Market Segmentation Overview

The United States AI server market is segmented by type into GPU-based, FPGA-based, and ASIC-based servers. By cooling technology, the market includes air cooling, liquid cooling, and hybrid cooling. Form factors include rack-mounted servers, blade servers, and tower servers. End-use industries span IT and telecommunications, BFSI, retail and e-commerce, healthcare and pharmaceuticals, automotive, and others, with demand distributed across major U.S. states.

Competitive Landscape and Company Analysis

The U.S. AI server market is highly competitive and innovation-driven, with leading players including Dell Inc., Cisco Systems, Inc., IBM Corporation, HP Development Company, L.P., Huawei Technologies Co., Ltd., NVIDIA Corporation, Fujitsu Limited, ADLINK Technology Inc., Lenovo Group Limited, and Super Micro Computer, Inc. These companies compete through performance innovation, ecosystem partnerships, and end-to-end AI infrastructure solutions, shaping the future of the United States AI server market.